Overview

Data cleansing, also known as data cleaning, is a crucial process that involves identifying and correcting inaccuracies in data to ensure its quality and reliability. It plays a central role in data analytics, machine learning (ML), and data science. Clean data is fundamental for accurate analysis and decision-making. Without proper data cleansing, the risk of drawing incorrect conclusions or making decisions based on faulty data significantly increases.

Moreover, data cleansing is a continuous process that needs to be integrated into daily data management operations. As new data is constantly being generated, collected, and integrated from multiple sources, the potential for data errors and inconsistencies grows. Regularly cleansing data ensures that datasets remain accurate, relevant, and valuable over time, supporting ongoing analytics and ML projects.

What is data cleansing?

Data cleansing involves the meticulous task of identifying and correcting inaccuracies and inconsistencies in data to ensure its quality and reliability. This process is business crucial as it directly impacts decision-making, operational efficiency, and customer satisfaction. By engaging in data cleansing, companies can harness the full potential of their data, making it an asset for strategic planning and building a competitive advantage.

The importance of data cleansing extends beyond just maintaining data quality. It plays a central role in various data-intensive fields, such as data analytics, machine learning, and data science. Clean data is fundamental for accurate analysis, model building, and algorithm training, directly influencing the outcomes and insights derived from data. Without proper data cleansing, the risk of drawing incorrect conclusions or making misguided decisions based on faulty data significantly increases.

Furthermore, data cleansing is not a one-time task but a continuous process that needs to be integrated into the daily operations of data management. As new data is constantly being generated, collected, and integrated from multiple sources, the potential for data errors and inconsistencies grows. Regularly cleansing data ensures that datasets remain accurate, relevant, and valuable over time—supporting ongoing analytics and machine learning projects.

What is the importance of data cleansing?

Data cleansing can ensure the integrity and usability of data. In data analytics and machine learning, the adage "garbage in, garbage out" is particularly relevant. High-quality, clean data is the cornerstone of accurate analytics, enabling organizations to derive meaningful insights that inform strategic decisions. Without data cleansing, businesses risk making decisions based on inaccurate or misleading data, potentially leading to detrimental outcomes. Therefore, investing time and resources into data cleansing is not just about maintaining data quality; it's about safeguarding the foundation upon which important decisions are made.

Moreover, data cleansing directly contributes to enhancing data quality, which, in turn, significantly impacts the efficiency and effectiveness of machine learning models. Machine learning algorithms rely on clean, structured, and relevant data to learn and make predictions. Any anomalies, such as missing data or duplicate data, can skew the results and compromise the model's performance. By ensuring the data is cleansed, organizations can improve the accuracy of their machine learning models, leading to more reliable predictions and insights.

Lastly, data cleansing plays a role in maintaining data governance and compliance standards. With increasing regulations around data privacy and protection, organizations must ensure their data is accurate, up to date, and free from any errors that could lead to compliance issues. Data cleansing helps in identifying and rectifying any inaccuracies in data, thereby supporting an organization's data governance framework and ensuring adherence to regulatory requirements. This not only protects the organization from potential legal and financial penalties, but also builds trust with customers and stakeholders by demonstrating a commitment to data quality and integrity.

How to cleanse data: A step-by-step process

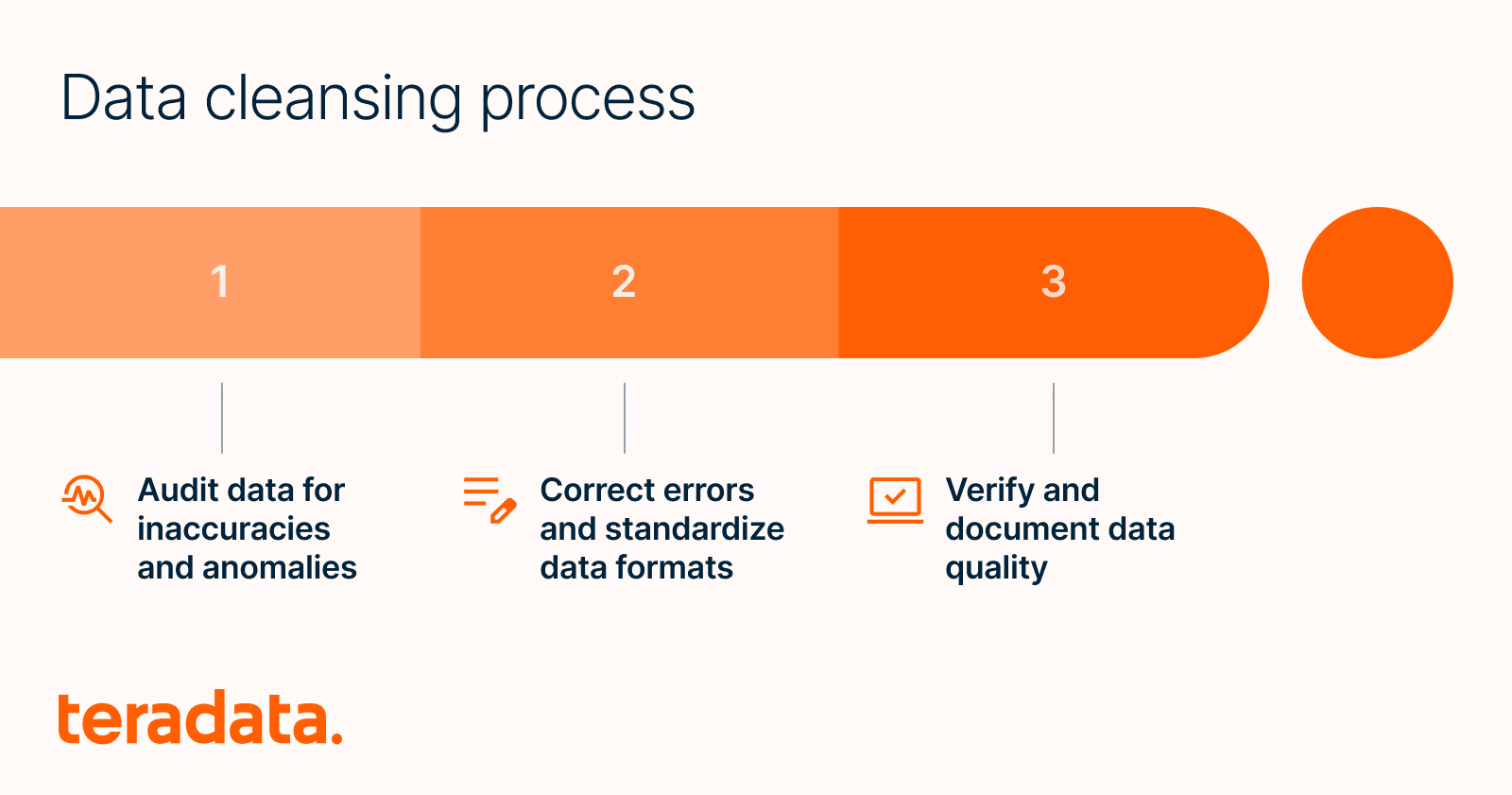

The data cleansing process involves several critical steps to ensure data quality and reliability. The first step is data auditing, where the existing data is meticulously examined for inaccuracies, inconsistencies, and anomalies. This involves using statistical methods and data cleansing tools to identify areas of concern, such as missing values, duplicate data, or irrelevant data. By thoroughly auditing the data, organizations can gain a comprehensive understanding of the quality of their data and the specific issues that need to be addressed.

Following the audit, the next step is to cleanse the data by correcting or removing the inaccuracies and inconsistencies identified. This may involve tasks such as filling in missing values, correcting data entry errors, removing duplicate records, and standardizing data formats. For instance, missing data can be addressed by inputting average values or using more sophisticated data imputation techniques, while duplicate data can be identified and removed using data cleansing tools designed for this purpose. This step is necessary for improving the overall quality of the dataset, making it more reliable and useful for analysis.

The final step in the data cleansing process is verification, where the cleansed data is reviewed to ensure that all issues have been adequately addressed and that no new errors have been introduced during the cleansing process. This may involve a combination of manual review and automated checks using data cleansing tools. Additionally, it's important to document the data cleansing process, including the issues identified, the actions taken to address them, and the results of the verification step. This documentation is valuable for understanding the quality of the dataset and for guiding future data cleansing efforts.

By following these steps, organizations can effectively cleanse their data, enhancing its quality and utility for data analytics, machine learning, and other data-driven initiatives.

Components of quality data

Quality data consists of:

- Validity: The degree to which data conforms to predefined standards, rules, or constraints, making it trustworthy and suitable for its intended purpose

- Accuracy: Ensures data is error free and precisely reflects the truth of data values

- Completeness: The extent to which all required and expected data elements are present within a dataset

- Consistency: Uniformity and accuracy of data within and across datasets

- Uniformity: Ensures the standardization of data formats and structures across datasets

Data cleansing tools

Data managers must leverage the right data cleansing tools to improve data quality efficiently and effectively. These tools are designed to automate many aspects of the data cleansing process, from identifying errors and inconsistencies to correcting or removing them. Specifically, such tools should offer advanced features for data cleaning, including data profiling, data deduplication, and data standardization. These capabilities enable organizations to cleanse large datasets more quickly and accurately than would be possible through manual efforts alone.

Another important category of data cleansing tools focuses on specific aspects of the data cleansing process, such as data scrubbing and data transformation. Data scrubbing tools are specialized in detecting and correcting errors in data, while data transformation tools are used to convert data from one format or structure to another—ensuring consistency across datasets. Tools like Google Sheets also offer basic data cleansing functionalities, such as removing duplicates or filling in missing values, which can be particularly useful for smaller datasets or simpler data cleansing tasks.

Moreover, the integration of data cleansing tools with other data management and analytics platforms can significantly enhance the efficiency of the data cleansing process. For example, data cleansing services can be integrated with cloud computing platforms, data warehouses, and data analytics software to streamline the flow of clean data across systems. This integration facilitates a more seamless data management process, from data entry and cleansing to analysis and reporting.

By selecting and effectively utilizing the appropriate data cleansing tools, organizations can ensure their data is of the highest quality, supporting accurate analytics and informed decision-making.

Data cleansing FAQs

What happens if data is not cleansed?

What happens if data is not cleansed?

Neglecting data cleansing can lead to a host of issues, including compromised data quality, inaccurate analytics, and flawed decision-making. Dirty data—or data that is incorrect, incomplete, or irrelevant—can skew analytics results, leading to misleading insights. This can have significant implications for business strategies, operational efficiency, and customer relationships.

Furthermore, unclean data can hinder the performance of machine learning models, reducing their accuracy and reliability. In essence, without regular data cleansing, organizations risk basing their decisions on faulty data, which can have far-reaching negative consequences.

How often should data be cleansed?

How often should data be cleansed?

The frequency of data cleansing depends on several factors, including the volume of data, the rate at which new data is generated, and the specific needs of the organization. In general, data should be cleansed regularly to ensure its quality and relevance. For businesses dealing with large volumes of data or rapidly changing datasets, this may mean performing data cleansing on a daily or weekly basis. For others, a monthly or quarterly cleansing schedule may be sufficient.

Ultimately, the goal is to maintain a consistent level of data quality that supports accurate analytics and informed decision-making.

Can data cleansing improve machine learning models?

Can data cleansing improve machine learning models?

Yes, data cleansing can significantly improve the performance of machine learning models. Machine learning algorithms rely on high-quality, relevant data to learn patterns and make predictions. Cleansing the data of inaccuracies, inconsistencies, and irrelevant information ensures that the model is trained on clean, reliable data, which enhances its ability to generate accurate predictions.

Moreover, data cleansing can help in identifying and removing biased data, further improving the fairness and objectivity of the model's outcomes. Therefore, data cleansing is a critical step in the preparation of data for machine learning, directly impacting the success of these models.